The consolidation of Cloudera and Hortonworks and the acquisition of MapR by HPE leave very little competition in the Hadoop distribution market. AWS EMR has, however, emerged as a worthy challenger to the dominance of the Cloudera Hadoop distribution. In this blog we focus on the lessons learnt about TCO savings when migrating on-premise Hadoop clusters to AWS EMR.

An as-is migration of Hadoop clusters from on-premise to AWS EMR will not bring TCO savings.

Yes, you heard that right: migrating Hadoop clusters as-is from an on-premise datacenter is unlikely to bring any commercial benefit. It will definitely reduce the manpower required to maintain a Hadoop cluster, but in terms of platform costs one should expect to see no difference.

Significant TCO savings can however be realized if the organization decides to make some simple changes to the architecture and data flows.

#1 TCO Saving strategy – Use a mix of SPOT and Dedicated compute capacity

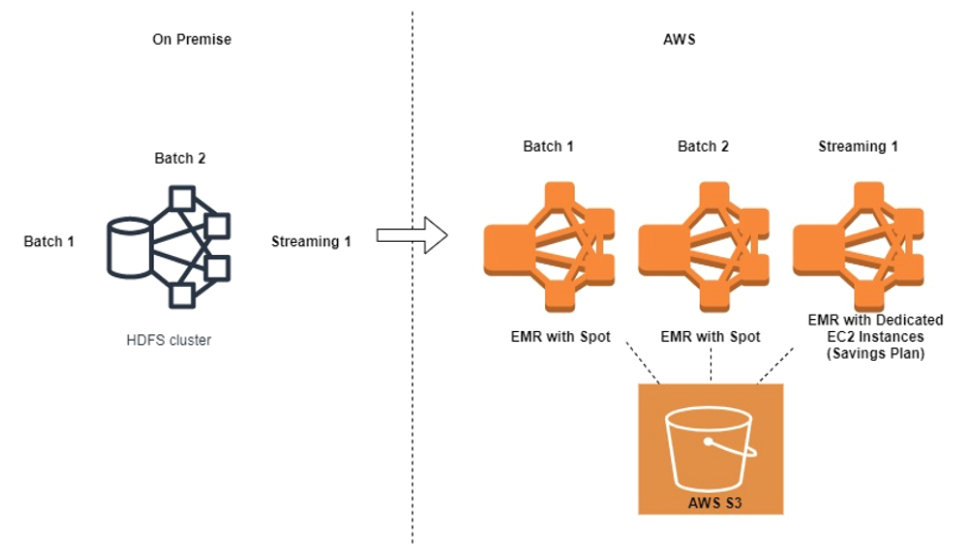

Neos IT has observed that customers typically have a mix of batch and streaming data processing jobs. Batch processing typically runs at certain times of the day and tolerates varying compute capacity well. Streaming data processing, however, tends to run 24x7 and is very sensitive to changes in compute capacity.

Hence it’s important to separate the batch workload from the streaming workload.

For the batch workloads one can achieve up to 70% savings in compute costs by using AWS Spot capacity. AWS has been steadily improving the integration between AWS EMR and AWS Spot, making the feature extremely compelling for Spark workloads running on Hadoop.
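To make the effect of the split concrete, here is a minimal sketch of the arithmetic. It assumes the dedicated (streaming) nodes are billed at a flat hourly rate and the batch nodes get a Spot discount off that same rate. The function name, node counts and the rate of 1.0 are illustrative placeholders, not AWS prices or customer figures.

```python
def blended_hourly_cost(hourly_rate, dedicated_nodes, spot_nodes, spot_discount):
    """Hourly compute cost for a cluster that mixes dedicated and Spot nodes.

    hourly_rate     -- price per node-hour without any discount (placeholder)
    dedicated_nodes -- nodes kept on dedicated capacity (streaming workload)
    spot_nodes      -- nodes moved to Spot capacity (batch workload)
    spot_discount   -- fraction saved on Spot, e.g. 0.70 for "up to 70%"
    """
    if not 0.0 <= spot_discount <= 1.0:
        raise ValueError("spot_discount must be between 0 and 1")
    dedicated_cost = dedicated_nodes * hourly_rate
    spot_cost = spot_nodes * hourly_rate * (1.0 - spot_discount)
    return dedicated_cost + spot_cost


# Hypothetical cluster: 4 streaming nodes, 16 batch nodes, normalised rate.
baseline = blended_hourly_cost(1.0, dedicated_nodes=20, spot_nodes=0, spot_discount=0.0)
mixed = blended_hourly_cost(1.0, dedicated_nodes=4, spot_nodes=16, spot_discount=0.70)
overall_saving = 1.0 - mixed / baseline
print(f"Overall compute saving: {overall_saving:.0%}")
```

Note that the best-case Spot discount applies only to the batch share of the cluster, so the overall saving depends on how much of the workload can actually be moved off dedicated capacity.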

#2 TCO Saving strategy – Choose the right region

Larger AWS Regions typically offer lower EC2 instance pricing. It’s surprising how much of a difference this makes to the TCO calculations.

Price of m3.2xlarge instance in EU Ireland.

Price of m3.2xlarge instance in EU Frankfurt.

The difference is approximately 7% per instance per hour. Combining spot pricing and the instance fleet feature in EMR, one can reduce the TCO by 10%, which is quite significant considering it’s a simple choice to make.
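The percentage comparison is simple to reproduce for any pair of regions. The sketch below assumes you look up the two hourly prices yourself; the values 1.00 and 0.93 are normalised placeholders chosen only to illustrate a 7% gap and are not actual m3.2xlarge prices.

```python
def regional_saving(price_expensive, price_cheap):
    """Fraction saved per instance-hour by choosing the cheaper region."""
    if price_expensive <= 0 or price_cheap < 0:
        raise ValueError("prices must be positive")
    return (price_expensive - price_cheap) / price_expensive


# Placeholder prices (normalised), NOT real AWS list prices.
saving = regional_saving(price_expensive=1.00, price_cheap=0.93)
print(f"Saving per instance-hour: {saving:.1%}")
```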

#3 TCO Saving strategy – Choose the right instances

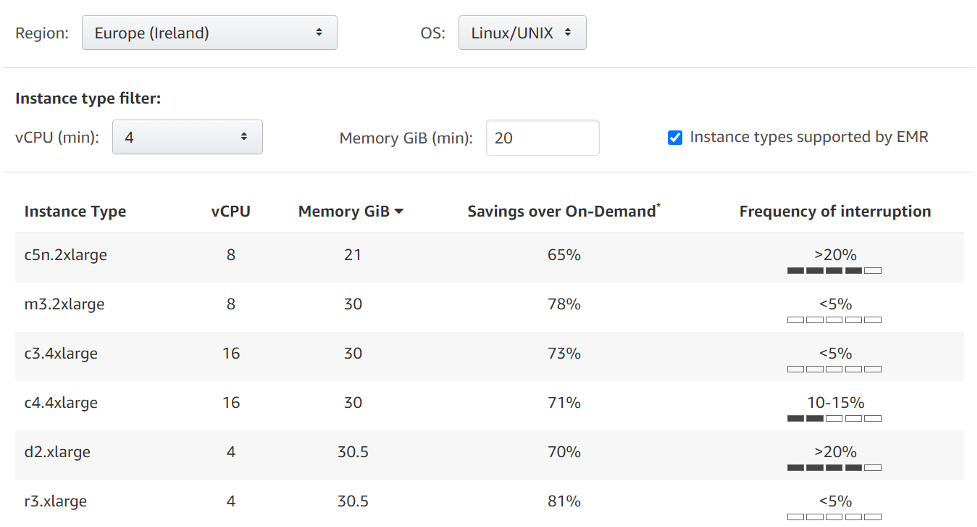

Neos IT recommends spending time to select the right EC2 instances for the AWS EMR Instance Fleet feature. One can select up to FIVE instance types to run the Spark jobs.

It’s highly recommended to right-size the Spark containers and keep them as small as possible. Small EC2 Spot instances are typically less likely to be interrupted, which reduces the chance of containers failing because your EC2 Spot capacity has been reclaimed.

Use the AWS Spot advisor to select up to FIVE instances which are least likely to be interrupted. Use these as input for your AWS EMR Instance Fleet configuration.
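As a rough sketch of what that input can look like, the helper below builds one task fleet in the dictionary shape that boto3's EMR `run_job_flow` call accepts under `Instances["InstanceFleets"]`. The helper name, the fleet name and the instance types are hypothetical placeholders, not Spot Advisor recommendations, and the limit of five simply mirrors the figure quoted in this post.

```python
MAX_INSTANCE_TYPES = 5  # limit as quoted in this post


def build_spot_task_fleet(instance_types, spot_capacity, timeout_minutes=10):
    """Return an EMR instance fleet definition that runs entirely on Spot.

    instance_types  -- instance type names picked from the Spot Advisor
    spot_capacity   -- target Spot capacity in units (1 unit per instance here)
    timeout_minutes -- how long EMR waits for Spot capacity before acting
    """
    if not 1 <= len(instance_types) <= MAX_INSTANCE_TYPES:
        raise ValueError(f"choose between 1 and {MAX_INSTANCE_TYPES} instance types")
    return {
        "Name": "batch-task-fleet",  # hypothetical name
        "InstanceFleetType": "TASK",
        "TargetOnDemandCapacity": 0,
        "TargetSpotCapacity": spot_capacity,
        "InstanceTypeConfigs": [
            {"InstanceType": itype, "WeightedCapacity": 1}
            for itype in instance_types
        ],
        "LaunchSpecifications": {
            "SpotSpecification": {
                "TimeoutDurationMinutes": timeout_minutes,
                # Fall back to On-Demand if Spot capacity cannot be fulfilled.
                "TimeoutAction": "SWITCH_TO_ON_DEMAND",
            }
        },
    }


# Placeholder instance types; substitute the ones the Spot Advisor suggests.
fleet = build_spot_task_fleet(
    ["m5.xlarge", "m5a.xlarge", "m4.xlarge", "r5.xlarge", "c5.xlarge"],
    spot_capacity=16,
)
```

The function only builds the configuration and makes no AWS calls, so it can be reviewed or unit-tested before being passed to the EMR API.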

#4 TCO Saving strategy – Replace HDFS with AWS S3

While some refactoring might be required for Spark jobs to read from and write to AWS S3 storage, the benefits make it a worthwhile investment. The Neos IT experience is that the refactoring requires very little development effort and is in most cases completed in just one sprint.
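Part of that refactoring is often just repointing input and output locations. The sketch below assumes a job's paths are plain `hdfs://` URIs and that the same directory layout is kept under an S3 bucket. The helper name, bucket and prefix are hypothetical, and real jobs may need further changes beyond path rewriting.

```python
from urllib.parse import urlparse


def hdfs_to_s3(uri, bucket, prefix=""):
    """Map an hdfs:// URI onto an s3:// URI, preserving the directory layout."""
    parsed = urlparse(uri)
    if parsed.scheme != "hdfs":
        raise ValueError(f"not an HDFS URI: {uri}")
    # Drop the namenode host and port; keep only the path below the root.
    parts = [prefix.strip("/"), parsed.path.lstrip("/")]
    key = "/".join(part for part in parts if part)
    return f"s3://{bucket}/{key}"


# Hypothetical namenode, bucket and prefix.
new_path = hdfs_to_s3(
    "hdfs://namenode:8020/data/events/2020",
    bucket="example-datalake",
    prefix="warehouse",
)
print(new_path)
```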

The announcements made at AWS Storage Day 2020 make lifecycle management of data more seamless than ever. If your Hadoop clusters store a lot of inactive data purely for archiving, S3 Intelligent-Tiering is expected to reduce your storage costs by up to 25%.
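One way to apply this is a bucket lifecycle rule that moves objects under an archive prefix into the Intelligent-Tiering storage class. The sketch below only builds the rule in the shape that boto3's S3 `put_bucket_lifecycle_configuration` call takes as `LifecycleConfiguration`. The helper name, rule ID, prefix and the 30-day delay are assumptions for illustration, not recommendations from the announcement.

```python
def intelligent_tiering_lifecycle(prefix, after_days):
    """Build a lifecycle configuration that transitions objects under
    `prefix` to S3 Intelligent-Tiering `after_days` days after creation."""
    if after_days < 0:
        raise ValueError("after_days must not be negative")
    rule = {
        "ID": "archive-to-intelligent-tiering",  # hypothetical rule name
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": after_days, "StorageClass": "INTELLIGENT_TIERING"}
        ],
    }
    return {"Rules": [rule]}


# Hypothetical archive prefix and delay.
lifecycle = intelligent_tiering_lifecycle("archive/", after_days=30)
```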

A detailed assessment of your on-prem Hadoop cluster can help decide whether AWS EMR is the right choice for your strategic IT goals. Neos IT specializes in managing large Hadoop clusters both on-prem and in the cloud.

To request an assessment of the benefits of migrating your Hadoop cluster, contact one of our experts today.